Project information

- Category: Computer Vision/Deep Learning

- Type: Convolutional Neural Network

- Client/Purpose: This experiment aimed to explore how convolutional neural networks can be applied to style transfer

- Project date: October 2021

- Project URL: github

Utilizing deep learning for style transfer between images

The goal was to implement the style transfer technique described in "Image Style Transfer Using Convolutional Neural Networks" (Gatys et al., CVPR 2016).

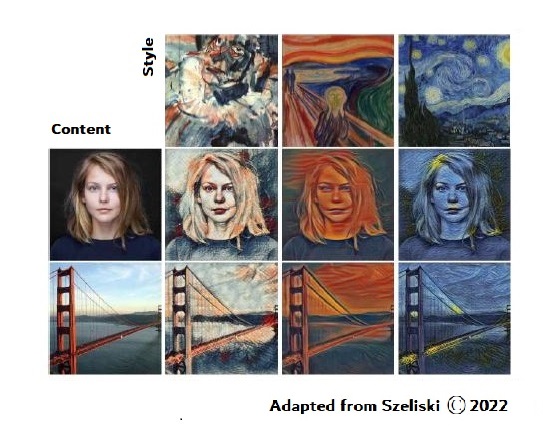

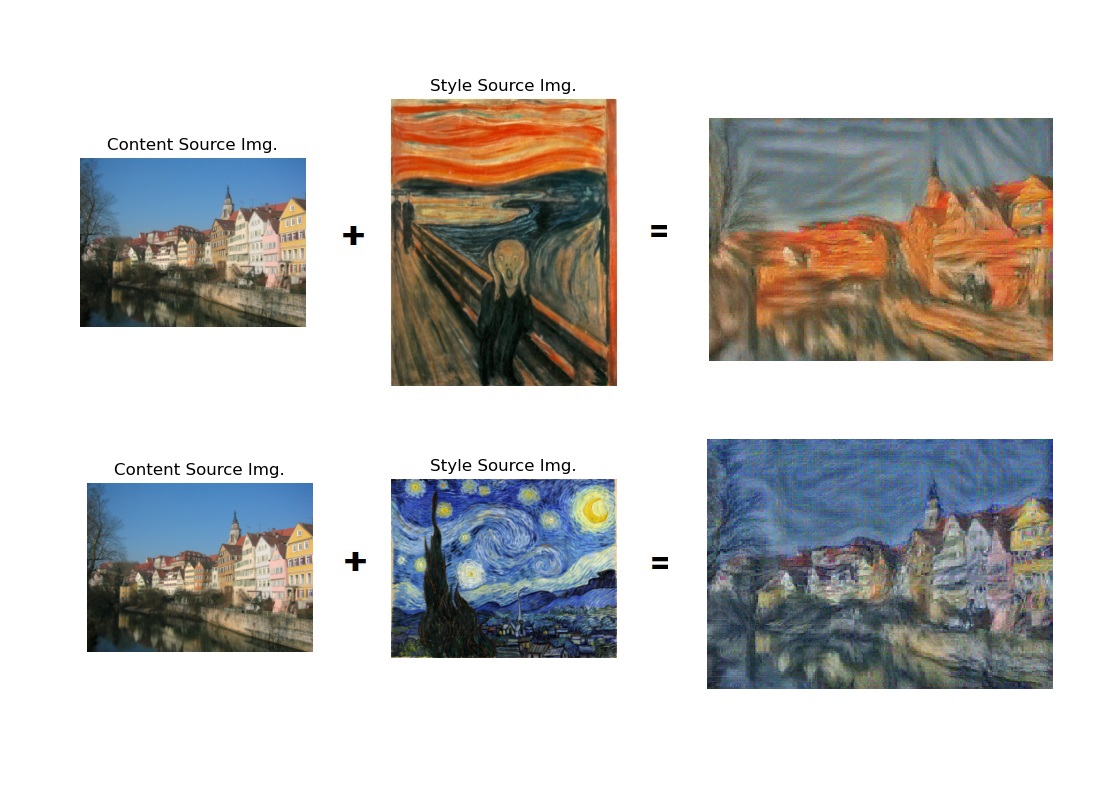

The technique takes two images, a content image and a style image, and produces a new image that preserves the content of the first while adopting the artistic style of the second.
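For reference, the objective in Gatys et al. can be summarized as follows. Here $\vec p$, $\vec a$, and $\vec x$ are the content, style, and generated images; $F^l$ and $P^l$ are the layer-$l$ feature maps of $\vec x$ and $\vec p$, each with $N_l$ filters of $M_l$ positions; $A^l$ is the Gram matrix of $\vec a$ at layer $l$; $w_l$ are per-layer weights; and $\alpha$, $\beta$ set the content/style trade-off. As in the paper, the content term uses a single layer $l$ while the style term sums over several layers.

$$
\begin{aligned}
\mathcal{L}_{\text{total}}(\vec p, \vec a, \vec x) &= \alpha\,\mathcal{L}_{\text{content}}(\vec p, \vec x) + \beta\,\mathcal{L}_{\text{style}}(\vec a, \vec x) \\
\mathcal{L}_{\text{content}}(\vec p, \vec x) &= \tfrac{1}{2} \sum_{i,j} \left(F^l_{ij} - P^l_{ij}\right)^2 \\
G^l_{ij} &= \sum_k F^l_{ik} F^l_{jk} \\
\mathcal{L}_{\text{style}}(\vec a, \vec x) &= \sum_l w_l \, \frac{1}{4 N_l^2 M_l^2} \sum_{i,j} \left(G^l_{ij} - A^l_{ij}\right)^2
\end{aligned}
$$

Because the style term compares Gram matrices (correlations between filter responses), it captures texture while discarding the spatial arrangement of the style image.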

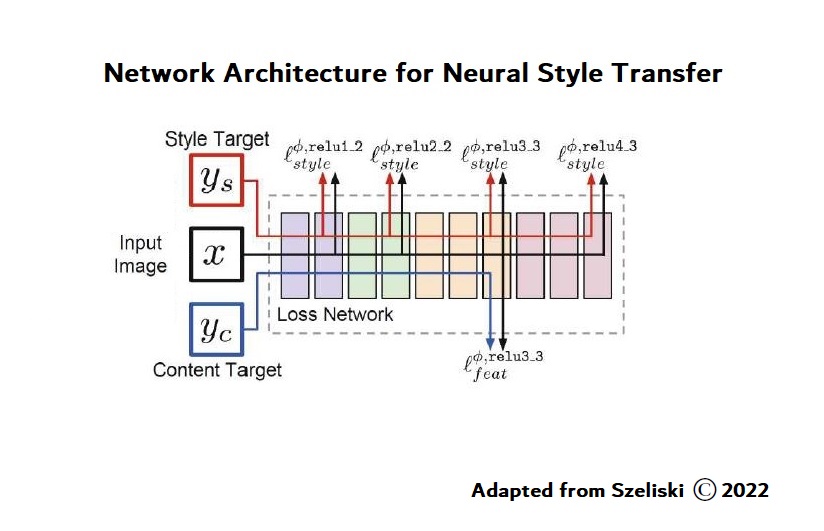

This is accomplished by formulating a loss function that compares images in the feature space of a pretrained deep network: one term penalizes deviations from the content of the content image, and another penalizes deviations from the style of the style image. Gradient descent is then performed not on the parameters of the model, which stay fixed, but on the pixels of the generated image, which can be initialized from white noise or from the content image.
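The idea of optimizing pixels instead of weights can be illustrated with a minimal NumPy sketch. It rests on loudly stated toy assumptions: the pretrained CNN is replaced by a single fixed random linear layer `W` (equivalent to a 1x1 convolution), the image is a small (channels × pixels) array, one layer serves for both content and style, and plain fixed-step gradient descent is used for simplicity (the paper itself reports using L-BFGS). The function names `gram_matrix`, `loss_and_grad`, and `stylize` are hypothetical and not taken from the project's code. The loss normalizations follow the Gatys et al. formulas.

```python
import numpy as np

def gram_matrix(F):
    """Gram matrix G = F F^T of a feature map F with shape (N filters, M positions)."""
    return F @ F.T

def loss_and_grad(X, W, P, A, alpha, beta):
    """Total loss alpha * L_content + beta * L_style and its gradient w.r.t. the pixels X.

    Toy assumption: the "network" is a single fixed linear layer F = W @ X
    (a 1x1 convolution) standing in for a pretrained CNN such as VGG.
    """
    F = W @ X
    N, M = F.shape
    # Content loss: 0.5 * sum((F - P)^2); its gradient w.r.t. F is (F - P).
    diff = F - P
    l_content = 0.5 * np.sum(diff ** 2)
    # Style loss: sum((G - A)^2) / (4 N^2 M^2); gradient w.r.t. F is (G - A) F / (N^2 M^2).
    D = gram_matrix(F) - A
    scale = 1.0 / (N ** 2 * M ** 2)
    l_style = 0.25 * scale * np.sum(D ** 2)
    dF = alpha * diff + beta * scale * (D @ F)
    # Chain rule through F = W X gives the gradient on the pixels.
    return alpha * l_content + beta * l_style, W.T @ dF

def stylize(X_content, X_style, W, alpha=1.0, beta=100.0, lr=0.02, steps=500, seed=0):
    """Gradient descent on the image pixels; the weights W are never updated."""
    P = W @ X_content                    # content target features
    A = gram_matrix(W @ X_style)         # style target Gram matrix
    X = np.random.default_rng(seed).uniform(0.0, 1.0, size=X_content.shape)  # white-noise start
    history = []
    for _ in range(steps):
        loss, grad = loss_and_grad(X, W, P, A, alpha, beta)
        history.append(loss)
        X -= lr * grad                   # update pixels only
    return X, history

# Toy data: a 3-channel, 16-pixel "image" and a 4-filter feature layer.
rng = np.random.default_rng(42)
C, N, M = 3, 4, 16
W = rng.normal(scale=0.5, size=(N, C))
content_img = rng.uniform(size=(C, M))
style_img = rng.uniform(size=(C, M))
result, history = stylize(content_img, style_img, W)
```

In a real implementation the gradient would come from automatic differentiation through the CNN rather than the hand-derived expressions used here; the hand derivation is only feasible because the toy feature extractor is linear.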

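To illustrate the process, a diagram of the architecture is shown. This architecture corresponds to the feed-forward variant of style transfer introduced by Johnson et al. (ECCV 2016) rather than to the per-image optimization described above. During training, the content target image y_c is fed into the image transformation network as its input x. The network's output ŷ is compared with y_c and with the style target y_s inside a fixed, pretrained loss network, and the transformation network's weights are updated to minimize the style reconstruction loss l_style and the feature reconstruction loss l_feat. Once trained, the network stylizes a new image in a single forward pass.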
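The training loop of this feed-forward formulation (Johnson et al., 2016), where the transformation network's weights rather than the pixels are optimized, can be sketched in NumPy under strong toy assumptions. The image transformation network is reduced to a single C×C colour-mixing matrix `T` (the real one is a deep CNN), the loss network is a fixed random 1x1 layer `W` instead of a pretrained VGG-16, and one layer is used for both losses. The loss normalizations (dividing by the number of feature elements, Gram matrix scaled the same way) follow our reading of Johnson et al. All function and variable names are hypothetical.

```python
import numpy as np

def gram(F):
    """Gram matrix normalised by the number of feature elements; F has shape (N, M)."""
    N, M = F.shape
    return (F @ F.T) / (N * M)

def loss_and_grad(T, W, Y_c, G_s, lam_c, lam_s):
    """Weighted loss for one content image and its gradient w.r.t. the weights T.

    Toy assumptions (not the real networks):
      image transformation network  f_T(y) = T @ y   (per-pixel colour mixing, C x C)
      loss network                   phi(y) = W @ y   (fixed 1x1 layer, N x C)
    """
    Y_hat = T @ Y_c                          # y_hat = f_T(y_c)
    F_hat, F_c = W @ Y_hat, W @ Y_c          # phi(y_hat), phi(y_c)
    N, M = F_hat.shape
    diff = F_hat - F_c
    l_feat = np.sum(diff ** 2) / (N * M)     # feature reconstruction loss
    D = gram(F_hat) - G_s
    l_style = np.sum(D ** 2)                 # squared Frobenius norm of the Gram difference
    # Gradient w.r.t. phi(y_hat); D is symmetric, so d l_style / dF = 4 D F / (N M).
    dF = lam_c * 2.0 * diff / (N * M) + lam_s * 4.0 * (D @ F_hat) / (N * M)
    dT = (W.T @ dF) @ Y_c.T                  # chain rule through F = W T Y_c
    return lam_c * l_feat + lam_s * l_style, dT

def train(contents, Y_s, W, lam_c=1.0, lam_s=1.0, lr=0.05, steps=300):
    """Full-batch gradient descent on T; the loss network W is never updated."""
    C = Y_s.shape[0]
    T = np.eye(C)                            # start as the identity mapping
    G_s = gram(W @ Y_s)                      # style target stays fixed for the whole run
    history = []
    for _ in range(steps):
        total, grad = 0.0, np.zeros_like(T)
        for Y_c in contents:
            loss, dT = loss_and_grad(T, W, Y_c, G_s, lam_c, lam_s)
            total += loss / len(contents)
            grad += dT / len(contents)
        history.append(total)
        T -= lr * grad
    return T, history

# Toy data: eight 3-channel, 16-pixel content images and one style image.
rng = np.random.default_rng(0)
C, N, M = 3, 4, 16
W = rng.normal(scale=0.5, size=(N, C))
contents = [rng.uniform(size=(C, M)) for _ in range(8)]
style = rng.uniform(size=(C, M))
T, history = train(contents, style, W)
new_image = rng.uniform(size=(C, M))
stylized = T @ new_image                     # stylizing a new image is one forward pass
```

The contrast with the pixel-optimization sketch is the point of the design: the expensive optimization happens once per style during training, after which each new image costs only a forward pass.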